Why AI evaluation is no longer optional

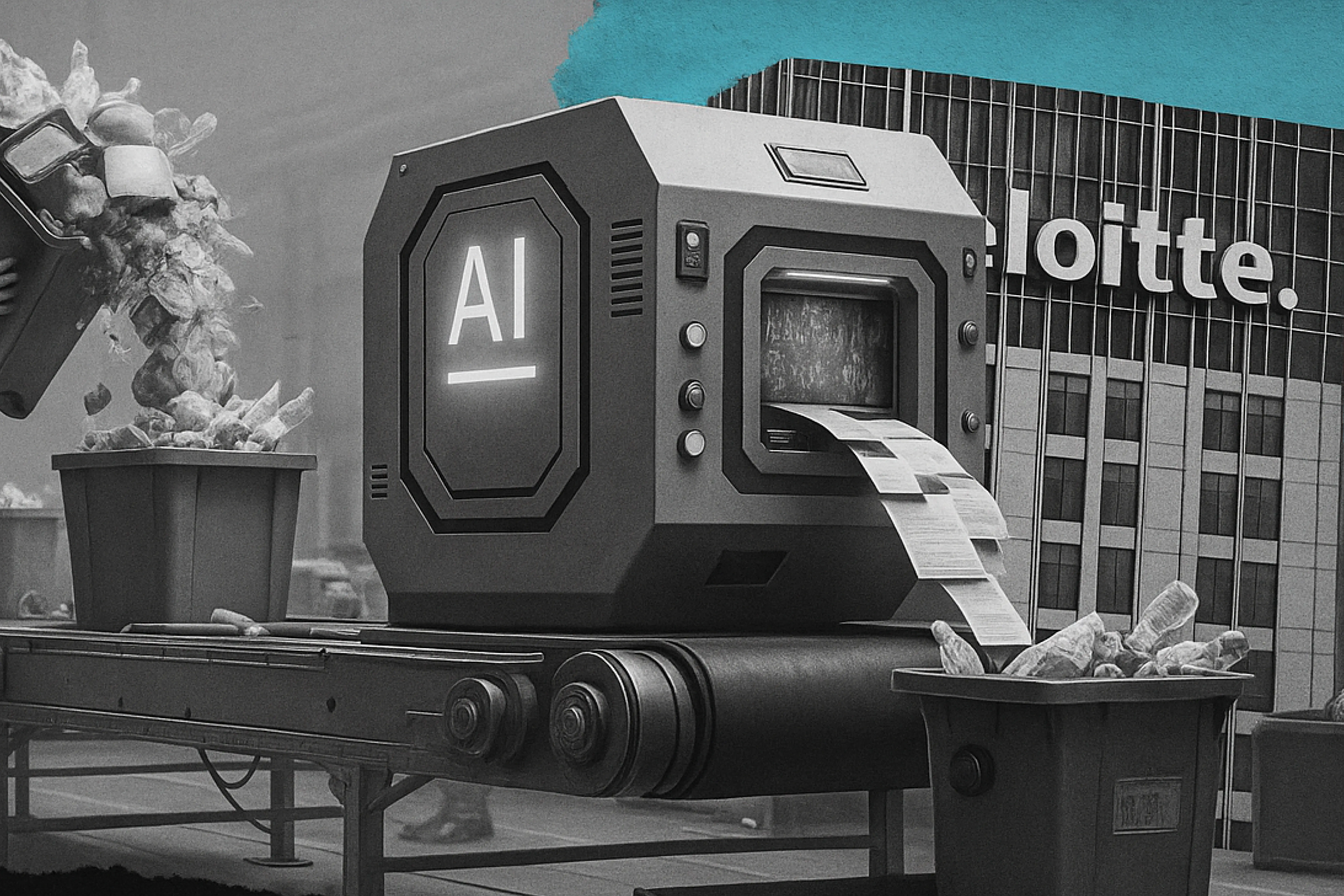

When news broke that Deloitte Australia would partially refund the Australian government for a $440,000 report that contained AI-generated fabrications, it wasn’t just a public embarrassment; it was a warning shot for every organization using AI without evaluation.

The case exposed a fundamental truth: AI without proper evaluation is a liability, not a tool for innovation.

According to reports from ABC News and The Guardian (October 2025), Deloitte was commissioned by the Australian Department of Employment and Workplace Relations to produce a 237-page report on automated welfare compliance systems. What appeared to be a thorough analysis later turned out to include fabricated quotes, references to non-existent academic studies, and even invented judicial citations.

Deloitte admitted that the report had used “a generative artificial intelligence (AI) large language model (Azure OpenAI GPT – 4o) based tool chain” (The Guardian, October 2025) in its production.

This challenge reflects a broader industry shift, one we’ve explored in The Complete Guide to Agentic AI: Implementation, Benefits, and Strategic Considerations.

The danger of unchecked AI

Generative AI can produce convincing, confident answers, even when they’re wrong.

These “hallucinations” are not rare technical glitches; they are an expected behavior of generative models, and they reach readers when AI systems are deployed without structured evaluation, clear success criteria, or human oversight.

In Deloitte’s case, the AI-generated content made its way into an official government report, compromising credibility and public trust.

This isn’t an isolated event; it’s a symptom of a broader issue across industries: rushing to integrate AI without the safeguards needed for reliability.

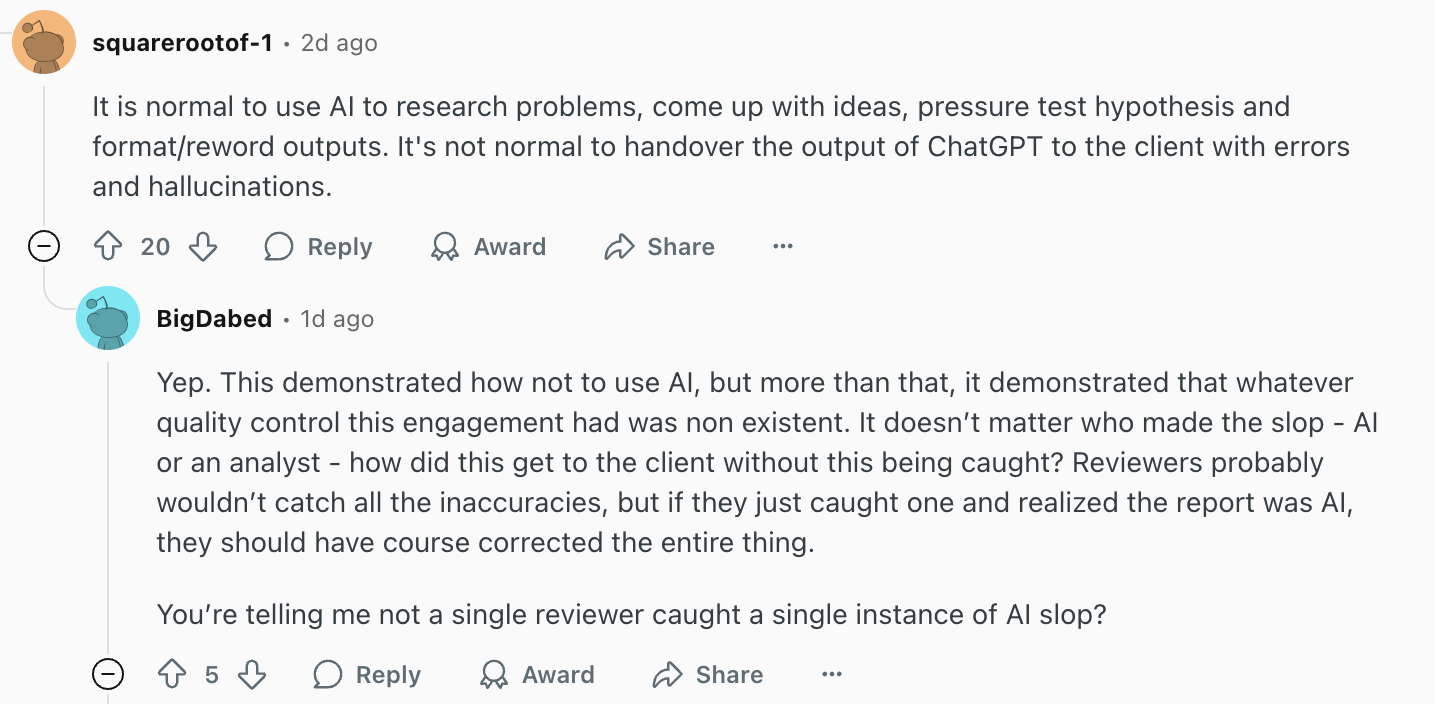

Public reactions emphasize that using AI is normal, but delivering unverified outputs isn’t.

The problem with generic AI

The Deloitte incident highlights a critical misconception: AI is not one-size-fits-all.

Generic models, even when trained on large datasets, lack the domain-specific context and guardrails necessary for high-stakes applications.

When organizations rely on off-the-shelf AI systems, they inherit not only their power but also their uncertainty.

These models are optimized for generality, not precision, meaning they can generate fluent but false content, especially in highly regulated industries like law, healthcare, or finance.

Without proper evaluation layers, human review, and domain-specific adaptation, generic AI becomes a risk amplifier. It scales not just efficiency but also potential errors, as seen in Deloitte’s case, where unchecked outputs slipped through layers of review and entered a government report.

The lesson? Generic AI may accelerate production, but it cannot guarantee truth.

Why AI evaluation matters

The solution isn’t to abandon AI; it’s to make it trustworthy.

That begins with evaluation-driven AI development, where every AI system is tested, monitored, and improved through measurable criteria and human feedback before reaching production.

At Linnify, we’ve developed a six-step evaluation framework that ensures each AI system (whether based on large language models or custom agentic architectures) is safe, explainable, and aligned with real-world objectives:

- Define expectations: collect real-world input/output examples and align on success criteria.
- Set measurable metrics: accuracy, completeness, latency, and domain-specific KPIs.
- Internal evaluation: test on edge cases and corner conditions.
- Human-in-the-loop testing: experts review and refine AI reasoning.
- Client evaluation dashboard: provides full transparency into model performance and behavior.
- Continuous improvement: real-time observability and post-deployment feedback loops.
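To make the first three steps more concrete, here is a minimal sketch of an evaluation harness in Python. It is an illustration under stated assumptions, not Linnify’s actual tooling: the names `EvalCase`, `EvalReport`, `evaluate`, and `passes_release_gate` are hypothetical, the thresholds are placeholders, and the substring check stands in for whatever scoring method a real project would agree on.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One real-world example: an input, the expected answer, and terms
    a complete answer should mention (all hypothetical fields)."""
    prompt: str
    expected: str
    required_terms: tuple[str, ...] = ()


@dataclass
class EvalReport:
    accuracy: float       # share of cases whose output contains the expected answer
    completeness: float   # average share of required terms present in the output
    max_latency_s: float  # slowest single call, in seconds


def evaluate(system: Callable[[str], str], cases: list[EvalCase]) -> EvalReport:
    """Run every case through the system under test and aggregate the metrics."""
    if not cases:
        raise ValueError("at least one evaluation case is required")
    correct = 0
    completeness_sum = 0.0
    max_latency = 0.0
    for case in cases:
        start = time.perf_counter()
        output = system(case.prompt)
        max_latency = max(max_latency, time.perf_counter() - start)
        normalized = output.lower()
        # Assumption: a case-insensitive substring match counts as "correct".
        if case.expected.lower() in normalized:
            correct += 1
        if case.required_terms:
            hits = sum(term.lower() in normalized for term in case.required_terms)
            completeness_sum += hits / len(case.required_terms)
        else:
            completeness_sum += 1.0  # nothing required, so trivially complete
    n = len(cases)
    return EvalReport(correct / n, completeness_sum / n, max_latency)


def passes_release_gate(report: EvalReport,
                        min_accuracy: float = 0.95,
                        min_completeness: float = 0.90,
                        max_latency_s: float = 2.0) -> bool:
    """Placeholder thresholds; real values come from the agreed success criteria."""
    return (report.accuracy >= min_accuracy
            and report.completeness >= min_completeness
            and report.max_latency_s <= max_latency_s)
```

In use, `system` would wrap the model or agent being tested, and the cases would include the edge cases collected during internal evaluation. A release would only proceed when `passes_release_gate(evaluate(system, cases))` returns `True`.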

This process turns AI from a static model into an adaptive, accountable system.

It’s how we ensure agentic AI and LLM-based solutions operate safely in production environments, reaching 95%+ accuracy across client use cases in retail, finance, and lifestyle sectors.

Human-in-the-loop: where accountability meets intelligence

One of the biggest misconceptions about AI is that automation should eliminate human involvement. The Deloitte case shows the opposite: humans must remain part of the process.

Reddit users discussing the case point out that AI literacy and human oversight are essential to prevent similar failures.

Instead of replacing human judgment, we amplify it, ensuring that experts remain involved in defining success, interpreting outcomes, and refining AI behavior.

This approach directly prevents the kind of unchecked automation that led to Deloitte’s errors. It creates a transparent feedback cycle between humans and machines, where decisions are explainable, measurable, and improvable.
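One way to picture such a feedback cycle is a gate that refuses to release a draft until an expert has verified every source it cites. The sketch below is a simplified illustration, not a description of any production system: the `[n]` citation style, the `gate_draft` function, and the `ReviewDecision` type are all assumptions made for the example.

```python
import re
from dataclasses import dataclass, field

# Assumption: the draft marks citations as "[1]", "[2]", and so on.
CITATION_PATTERN = re.compile(r"\[(\d+)\]")


@dataclass
class ReviewDecision:
    needs_human_review: bool
    reasons: list[str] = field(default_factory=list)


def gate_draft(draft: str, verified_refs: set[int]) -> ReviewDecision:
    """Flag a draft for expert review if it cites any reference that a
    human reviewer has not yet confirmed to exist and to say what is claimed."""
    cited = {int(number) for number in CITATION_PATTERN.findall(draft)}
    unverified = sorted(cited - verified_refs)
    reasons = [f"reference [{n}] has not been verified by a reviewer"
               for n in unverified]
    return ReviewDecision(needs_human_review=bool(reasons), reasons=reasons)
```

A draft citing `[1]` and `[3]` when only `{1, 2}` have been verified would be held back with a reason naming reference `[3]`, which gives the reviewer a concrete item to check rather than a vague warning.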

The result? AI that’s not just capable, but credible.

Why evaluation matters for LLMs and agentic systems

As large language models and agentic AI systems become central to business operations, evaluation frameworks are essential for maintaining accuracy, safety, and fairness.

Evaluation transforms generative AI from “it seems right” to “we know it’s right.”

It bridges the gap between LLM capability and enterprise reliability, ensuring that every generated response, prediction, or recommendation aligns with truth, compliance, and user intent.

This is especially critical in the era of AI agents that operate autonomously across workflows. Without observability layers, companies risk scaling uncertainty instead of intelligence.
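As a rough illustration of what an observability layer can track after deployment, the following Python sketch keeps a rolling window of reviewed outcomes and raises a flag when accuracy falls below a target. The class name `RollingAccuracyMonitor`, the window size, and the threshold are hypothetical choices for the example; a real deployment would feed this from its own logging and review pipeline.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Tracks the share of correct outputs over the most recent `window` checks."""

    def __init__(self, window: int = 100, threshold: float = 0.95) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self._results: deque = deque(maxlen=window)  # oldest results drop off automatically
        self.threshold = threshold

    def record(self, was_correct: bool) -> None:
        """Store the outcome of one reviewed or automatically checked output."""
        self._results.append(bool(was_correct))

    @property
    def accuracy(self) -> Optional[float]:
        """Rolling accuracy, or None before any result has been recorded."""
        if not self._results:
            return None
        return sum(self._results) / len(self._results)

    def should_alert(self) -> bool:
        """Alert only once the window is full, to avoid noise from tiny samples."""
        window_full = len(self._results) == self._results.maxlen
        return window_full and self.accuracy < self.threshold
```

Waiting for a full window before alerting is a deliberate trade-off in this sketch: it delays the first alert, but it avoids paging a team because one of the first three outputs happened to be wrong.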

Building trust in the age of AI

Organizations today face a pivotal decision: deploy AI fast and risk credibility, or deploy it responsibly and earn trust. The Deloitte case shows what happens when oversight is treated as an afterthought.

As AI systems influence decisions in governance, finance, healthcare, and beyond, trust and transparency are the new benchmarks of success. Evaluation isn’t optional; it’s the foundation for sustainable AI adoption.

The way forward

AI innovation must evolve hand in hand with accountability, observability, and evaluation.

The companies that lead the next wave of AI transformation will be those that build systems people can understand and trust.

The Deloitte report incident is a warning sign, but it also marks a turning point: the industry now understands that responsible AI isn’t just ethical; it’s operationally essential.

Because in the race to innovate, trust isn’t a byproduct of AI; it’s the ultimate measure of progress. This is a reminder that trust can’t be automated.

If you’re building with AI, let’s make sure you’re building something reliable.
