Crossing the GenAI divide: Why your Agentic AI success depends on the right partner



If you are not yet familiar with the term, Agentic AI represents the next frontier in intelligent systems: autonomous agents capable of perceiving their environment, making decisions, and taking actions to achieve specific goals with minimal human oversight.

A new MIT study reveals why 95% of enterprise AI initiatives fail, and how choosing the right development partner is your key to crossing the divide.

Why are 95% of enterprise AI projects failing?

The outcomes are so divided that researchers have coined a new term, the GenAI Divide, to describe the chasm between those achieving millions in value and the vast majority stuck with no measurable P&L (Profit and Loss) impact.

The research, based on structured interviews with 52 organizations, surveys with 153 senior leaders, and analysis of over 300 public AI implementations, paints a clear picture:

"Just 5% of integrated AI pilots are extracting millions in value, while the vast majority remain stuck with no measurable P&L impact." (pg. 3)

For leading brands evaluating AI investments, this isn't just another technology trend; it's a strategic imperative that will determine competitive advantage in the next decade.

What makes external AI partnerships 2x more successful than internal development?

The data reveals a critical insight that challenges conventional wisdom about AI development.

While many organizations assume building AI capabilities internally offers more control and customization, the research shows the opposite is true.

"Our research reveals a steep drop-off between investigations of GenAI adoption tools and pilots and actual implementations, with significant variation between generic and custom solutions." (pg. 6)

Specifically, only 5% of custom enterprise AI tools reach production, compared to much higher adoption rates for simple, consumer-grade tools.

The reasons for this failure aren't technical; they're structural.

As the study notes: "The 95% failure rate for enterprise AI solutions represents the clearest manifestation of the GenAI Divide. Organizations stuck on the wrong side continue investing in static tools that can't adapt to their workflows, while those crossing the divide focus on learning-capable systems." (pg. 7)

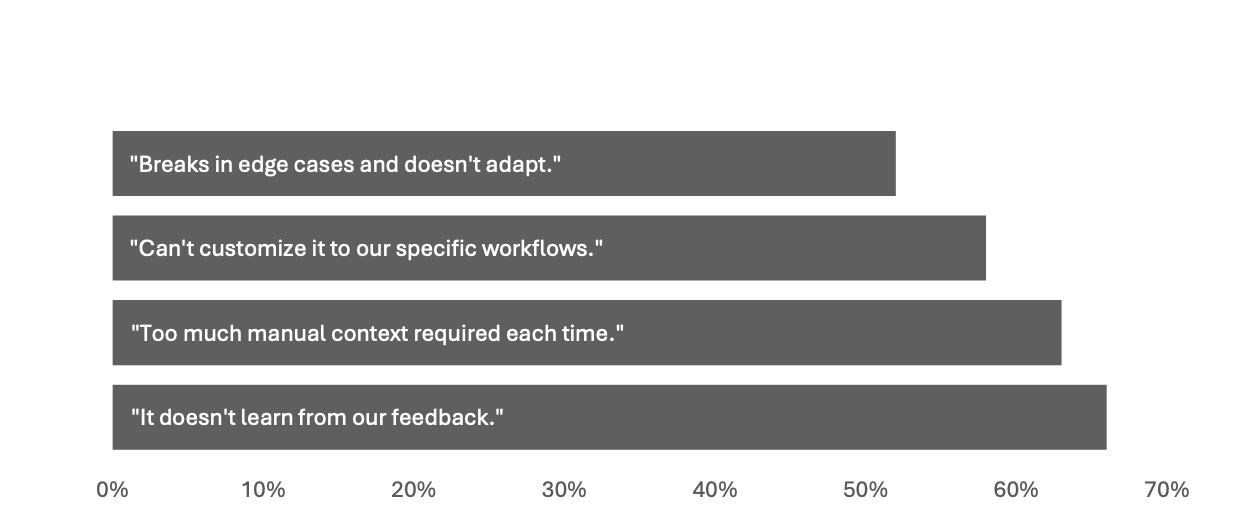

What are the 3 critical gaps that can kill any internal AI project?

1. The learning gap

Most internally built AI systems fail because they don't learn from feedback or adapt to changing workflows.

"The primary factor keeping organizations on the wrong side of the GenAI Divide is the learning gap, tools that don't learn, integrate poorly, or match workflows." (pg. 10)

2. The integration challenge

Internal teams often underestimate the complexity of integrating AI into existing workflows.

Users consistently report that enterprise AI tools are "brittle, overengineered, or misaligned with actual workflows" (pg. 7), while the same users praise consumer tools like ChatGPT for their flexibility and responsiveness.

3. The memory problem

The study reveals that "ChatGPT's very limitations reveal the core issue behind the GenAI Divide: it forgets context, doesn't learn, and can't evolve. For mission-critical work, 90% of users prefer humans." (pg. 12)

This highlights why building learning-capable, contextual AI systems requires specialized expertise.
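To illustrate the difference between a static tool and one that retains feedback, here is a minimal sketch. It is not taken from the study or from any specific product: `FeedbackMemory`, `answer`, and the sample question are hypothetical names and data chosen for illustration, and a production system would need far more than a dictionary lookup.

```python
from typing import Dict, Optional


class FeedbackMemory:
    """Hypothetical store of user corrections, keyed by normalized question."""

    def __init__(self) -> None:
        self._corrections: Dict[str, str] = {}

    @staticmethod
    def _normalize(question: str) -> str:
        # Collapse case and whitespace so near-identical questions match.
        return " ".join(question.lower().split())

    def record(self, question: str, corrected_answer: str) -> None:
        # Remember the correction a user supplied for this question.
        self._corrections[self._normalize(question)] = corrected_answer

    def recall(self, question: str) -> Optional[str]:
        # Return the stored correction, or None if nothing was recorded.
        return self._corrections.get(self._normalize(question))


def answer(question: str, memory: FeedbackMemory) -> str:
    """Prefer a remembered correction; otherwise fall back to a static reply."""
    remembered = memory.recall(question)
    if remembered is not None:
        return remembered
    return "Default answer from a static tool."


memory = FeedbackMemory()
first = answer("What is our approval limit?", memory)
memory.record("What is our approval limit?", "Approvals above 10,000 EUR need a director.")
second = answer("what is our  approval limit?", memory)

print(first)
print(second)
```

The first call returns the static fallback; after one correction is recorded, the same question (even with different casing and spacing) returns the corrected answer instead of repeating the original mistake.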

Which companies successfully implement Agentic AI and what do they do differently?

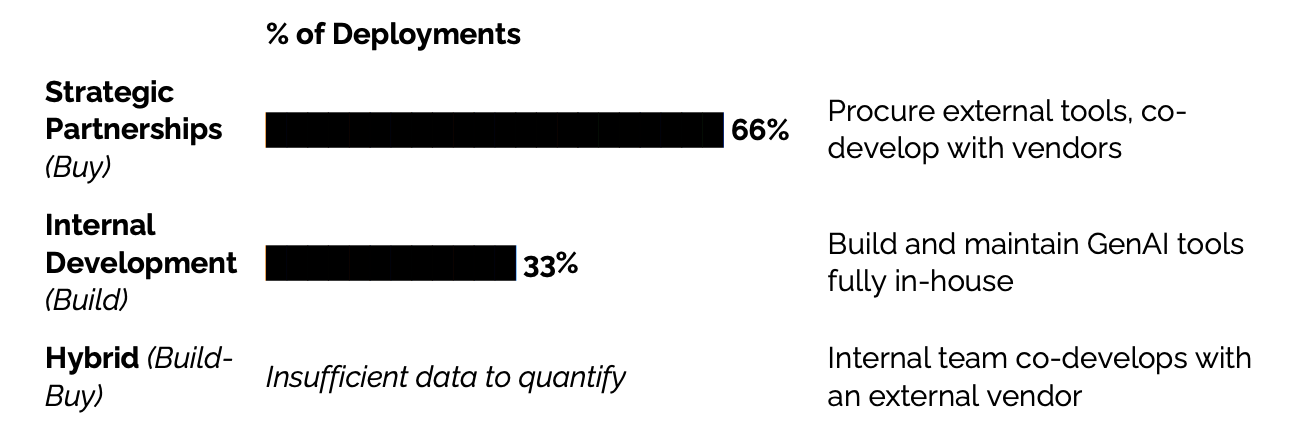

The most striking finding in the research concerns the success rates of different development approaches.

Organizations that partner with external AI specialists achieve dramatically better outcomes than those building internally.

"In our sample, external partnerships with learning-capable, customized tools reached deployment ~67% of the time, compared to ~33% for internally built tools." (pg. 19)

This represents a 2x success rate advantage for strategic partnerships over internal development.

The research identifies three key organizational structures for GenAI implementation, with clear performance differences:

- Strategic partnerships (Buy): 66% deployment success rate - "Procure external tools, co-develop with vendors"

- Internal development (Build): 33% deployment success rate - "Build and maintain GenAI tools fully in-house"

- Hybrid (Build-Buy): Insufficient data to quantify success rates (pg. 19)
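The 2x figure follows directly from the two deployment rates quoted from the study. A minimal sketch of the arithmetic, assuming the rounded ~67% and ~33% values (the variable names are illustrative, not from the report):

```python
# Deployment rates as quoted from the study (approximate, rounded values).
partnership_rate = 0.67  # external partnerships ("Buy")
internal_rate = 0.33     # internal development ("Build")

# Relative advantage of partnerships over internal builds.
advantage = partnership_rate / internal_rate

print(f"Partnerships deploy about {advantage:.1f}x as often as internal builds")
```

Because both inputs are rounded estimates from a limited sample, the ratio is best read as "roughly double" rather than as a precise multiplier.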

Why partnerships outperform internal builds

Domain expertise at scale

External partners bring specialized knowledge that internal teams often lack.

"The most successful startups addressed both the desire for learning systems and the skepticism around new tools by executing two strategies: Customizing for specific workflows [and] Leveraging referral networks." (pg. 16)

Proven validation frameworks

Leading AI development partners use validation-driven approaches that reduce risk.

"Tools that succeeded shared two traits: low configuration burden and immediate, visible value. In contrast, tools requiring extensive enterprise customization often stalled at pilot stage." (pg. 16)

Faster time-to-value

The research shows that external partnerships consistently deliver faster implementation timelines. "Top performers reported average timelines of 90 days from pilot to full implementation. Enterprises, by comparison, took nine months or longer." (pg. 7)

How do you choose the right Agentic AI development partner in 2025?

The study reveals that organizations successfully crossing the GenAI Divide approach AI procurement differently than traditional software purchases.

They act "like BPO clients, not SaaS customers. They demand deep customization, drive adoption from the front lines, and hold vendors accountable to business metrics." (pg. 18)

What should you look for in an Agentic AI development partner?

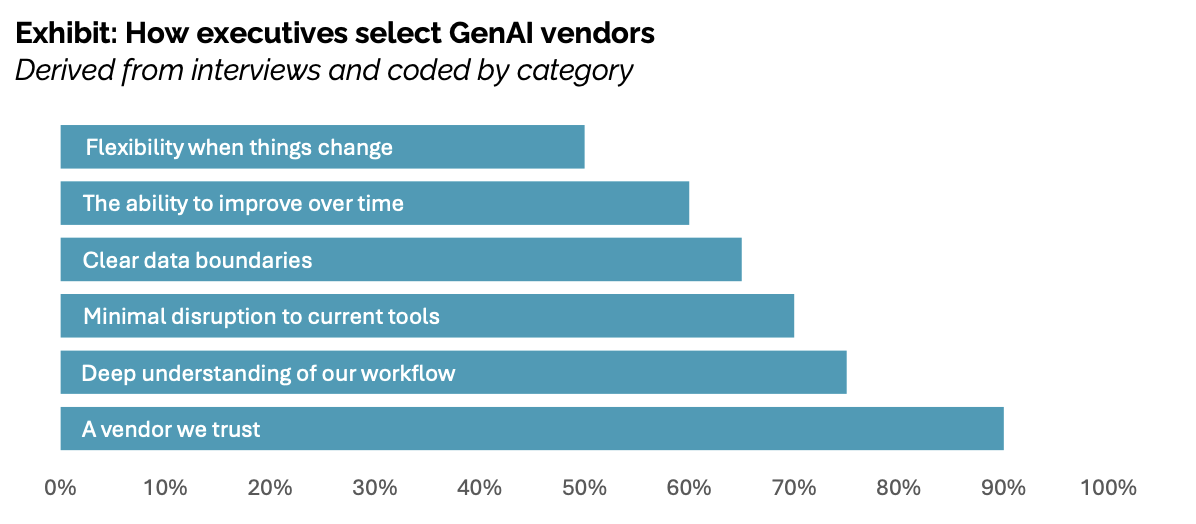

Based on interviews with successful AI buyers, the research identifies what executives actually prioritize when selecting AI partners:

- A vendor we trust

"We're more likely to wait for our existing partner to add AI than gamble on a startup." (pg. 15)

- Deep understanding of our workflow

"Most vendors don't get how our approvals or data flows work" (pg. 15)

- Minimal disruption to current tools

"If it doesn't plug into Salesforce or our internal systems, no one's going to use it." (pg. 15)

- Clear data boundaries

"I can't risk client data mixing with someone else's model, even if the vendor says it's fine." (pg. 15)

- The ability to improve over time

"It's useful the first week, but then it just repeats the same mistakes. Why would I use that?" (pg. 15)

- Flexibility when things change

"Our process evolves every quarter. If the AI can't adapt, we're back to spreadsheets." (pg. 16)

What is the GenAI divide, and how can organizations cross it?

The research reveals an urgent timeline consideration for organizations evaluating AI partnerships. "The window for crossing the GenAI Divide is rapidly closing. Enterprises are locking in learning-capable tools." (pg. 18)

"In the next few quarters, several enterprises will lock in vendor relationships that will be nearly impossible to unwind. This 18-month horizon reflects consensus from seventeen procurement and IT sourcing leaders we interviewed." (pg. 18)

The study emphasizes that "Organizations investing in AI systems that learn from their data, workflows, and feedback are creating switching costs that compound monthly." (pg. 18)

This makes the choice of development partner not just a tactical decision, but a strategic commitment that will shape AI capabilities for years to come.

Linnify: Your bridge across the GenAI divide

At Linnify, we understand the critical factors that separate successful AI initiatives from the 95% that fail to deliver value.

Our comprehensive Agentic AI Development capabilities are specifically designed to help leading brands cross the GenAI divide and accelerate their digital transformation initiatives.

Our validation-driven Agentic AI framework

Our four-phase approach directly addresses the learning gap that keeps most organizations trapped:

Discover: We co-define the agent’s role, responsibilities, persona, and KPIs, establishing comprehensive evaluation metrics and success criteria that align with your business objectives from day one.

Validate: We build functional PoCs using frameworks like LangChain and RAG, developing custom evaluation datasets and implementing both static and dynamic AI evaluators alongside human-in-the-loop testing to prove measurable value before scaling.

Scale: We productize AI agents with full system integration, performance optimization, and ethical guardrails, delivering the learning-capable, contextual systems the research identifies as critical for success.

Iterate: We implement continuous refinement based on live data and user feedback, using our established evaluation metrics and AI-powered assessment tools to ensure your AI systems evolve, improve performance, and maintain quality over time.
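To make the evaluation steps in the Validate and Iterate phases more concrete, here is a minimal sketch of what a static evaluator over a small evaluation dataset might look like. Everything in it is hypothetical: `EvalCase`, `keyword_evaluator`, `run_evaluation`, the toy agent, and the 0.8 pass threshold are illustrative assumptions, not Linnify's actual tooling and not a LangChain API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """One entry in a hypothetical evaluation dataset."""
    prompt: str
    required_keywords: List[str]


def keyword_evaluator(answer: str, case: EvalCase) -> bool:
    """Static check: pass only if every required keyword appears in the answer."""
    lowered = answer.lower()
    return all(keyword.lower() in lowered for keyword in case.required_keywords)


def run_evaluation(agent: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Return the fraction of cases the agent passes (0.0 for an empty dataset)."""
    if not cases:
        return 0.0
    passed = sum(keyword_evaluator(agent(case.prompt), case) for case in cases)
    return passed / len(cases)


def toy_agent(prompt: str) -> str:
    """Stand-in for a real agent; it only knows about refunds."""
    if "refund" in prompt.lower():
        return "Refunds are processed within 14 days of approval."
    return "I am not sure."


cases = [
    EvalCase("How long does a refund take?", ["14 days"]),
    EvalCase("Who approves a purchase order?", ["finance"]),
]

pass_rate = run_evaluation(toy_agent, cases)
PASS_THRESHOLD = 0.8  # assumed KPI, e.g. one agreed during the Discover phase

print(f"pass rate: {pass_rate:.2f}")
if pass_rate < PASS_THRESHOLD:
    print("Below threshold: route failing cases to human review")
```

A keyword check is only the simplest kind of static evaluator. The same `run_evaluation` loop could accept a model-based (dynamic) evaluator or a human reviewer's verdict in place of `keyword_evaluator`, which is the reason the scoring function is kept separate from the loop in this sketch.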

Why is Linnify your best partner choice when it comes to AI?

- Proven external partnership model

As the MIT research demonstrates, external partnerships achieve 2x the success rate of internal builds. Our track record as a trusted AI development partner positions us to help you join the successful 5% rather than the struggling 95%.

- End-to-end expertise

From data engineering to AI deployment, we provide the comprehensive capabilities needed to build learning-capable, integrated AI systems that actually work in production environments.

- Responsible & scalable AI

We implement best practices for data privacy, security, and ethical AI, ensuring your solutions meet the compliance requirements that successful enterprises demand.

The time to act is now

The MIT research makes clear that the GenAI Divide isn't permanent, but crossing it requires fundamentally different choices about partnerships and technology.

"For organizations currently trapped on the wrong side, the path forward is clear: stop investing in static tools that require constant prompting, start partnering with vendors who offer custom systems, and focus on workflow integration over flashy demos." (pg. 23)

The window for establishing successful AI partnerships is narrowing rapidly.

Organizations that act now to partner with proven AI development specialists will establish the learning-capable systems needed to capture millions in value.

Those who delay or continue with failed internal approaches will remain trapped on the wrong side of the divide.

Ready to cross the GenAI Divide?

Contact Linnify today to discover how our Agentic AI development can transform your organization from an AI experimenter into an AI leader.

This analysis is based on "The GenAI Divide: State of AI in Business 2025" by MIT Project NANDA, a comprehensive study of AI implementation across enterprise organizations. The research methodology included structured interviews with 52 organizations, surveys with 153 senior leaders, and systematic analysis of over 300 public AI initiatives.
